A guest article by Dr. Tim Delbrügger, Head of AI & IoT at iits-consulting and Jonathan Eschbach, Senior AI Engineer at iits-consulting

Document extraction using AI and Open Telekom Cloud

In this article you will read

- how an AI based on open-source LLMs supports work on tenders,

- how it monitors its own results,

- and how you can experiment with AI yourself.

Artificial intelligence (AI), especially generative AI and AI-based chatbots such as ChatGPT, has become an integral part of our everyday lives. AI tools can be found in navigation devices, perform research tasks, and create texts. They offer an impressive range of possible applications, from answering general questions to providing support in more specialized areas.

In the corporate environment, however, specific requirements and challenges make it difficult to adopt such AI tools. The following questions arise in particular:

- How can AIs use company-specific information to provide targeted answers to company-relevant questions?

- How can I ensure that the AI's answers are trustworthy?

- How can I ensure that my sensitive company data remains protected, is processed in compliance with data protection regulations and remains within Germany?

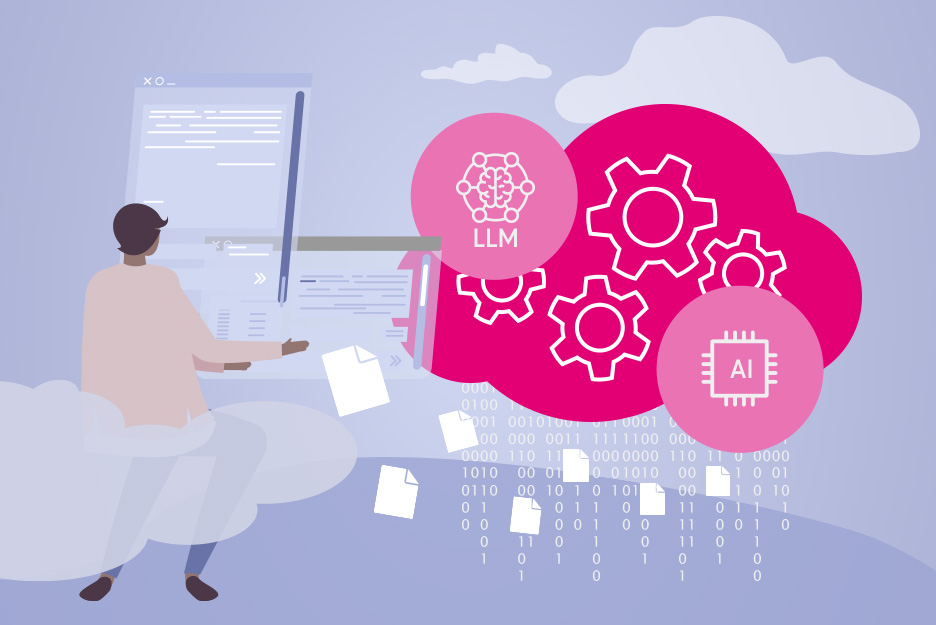

One approach to solving this problem is the use of so-called retrieval-augmented generation (RAG) systems. These systems enrich language models with subject-specific data so that they can generate precise and relevant answers to specific questions. The confidentiality of company information is maintained because the data is processed in a controlled environment.

A common application in the corporate environment is the evaluation of tender documents. Let's imagine a district publishes a comprehensive document on the award conditions for fiber optic expansion, with 22 pages of text and further referenced documents. Such a tender contains a lot of important information, such as deadlines, requirements, and contract terms. Participating in tenders generates substantial costs for a company, so the probability of succeeding is an important factor in deciding whether to participate. Extracting certain facts from the document can be very helpful here, such as the specified deadlines for submitting bids or for implementation.

This is where the RAG system comes into play. With the help of such a system, large language models (LLMs) can be specifically “fed” with the document. The relevant information from the data source, in this case the tender document, is sent to the model together with the question so that precise answers to specific questions are possible. For example, if we ask the question “What is the date of the tender deadline?”, the RAG system could provide the answer “15.07.2022”. It also indicates that this information can be found on page 9 of the document.
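The retrieval and prompting steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used at iits: the `Page` class, the `retrieve` and `build_prompt` helpers, and the sample page texts are all hypothetical, and the simple word-overlap ranking stands in for the embedding-based search a real RAG system would typically use. The call to the LLM itself is left out.

```python
import re
from dataclasses import dataclass


@dataclass
class Page:
    number: int
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, pages: list[Page], top_k: int = 2) -> list[Page]:
    """Rank pages by how many question words they share (toy retriever)."""
    q_tokens = tokenize(question)
    ranked = sorted(
        pages,
        key=lambda p: len(q_tokens & tokenize(p.text)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, context: list[Page]) -> str:
    """Combine the retrieved pages and the question into one prompt."""
    context_block = "\n".join(f"[page {p.number}] {p.text}" for p in context)
    return (
        "Answer using only the context below and cite the page number.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}"
    )


# Hypothetical excerpt of a tender document (invented sample text).
pages = [
    Page(3, "The contracting authority is the district administration."),
    Page(9, "The tender deadline is 15.07.2022. Bids received after this date are excluded."),
    Page(14, "Implementation of the fiber optic expansion follows after the contract is awarded."),
]

question = "What is the date of the tender deadline?"
top_pages = retrieve(question, pages, top_k=1)
prompt = build_prompt(question, top_pages)
print(prompt)  # this prompt would then be sent to the LLM
```

Because every context line carries its page number, the model can be asked to cite the page alongside the answer, which is what allows the source to be reported back to the user.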

This approach shows how RAG systems can answer specific, business-relevant questions by directly accessing the data sources provided. Such a system significantly increases efficiency because it eliminates the need for time-consuming manual searches through documents. An employee should still check the facts found in order to rule out “hallucinations” of the LLM. This is the starting point for a further efficiency boost that we usually build into our projects: self-evaluation.

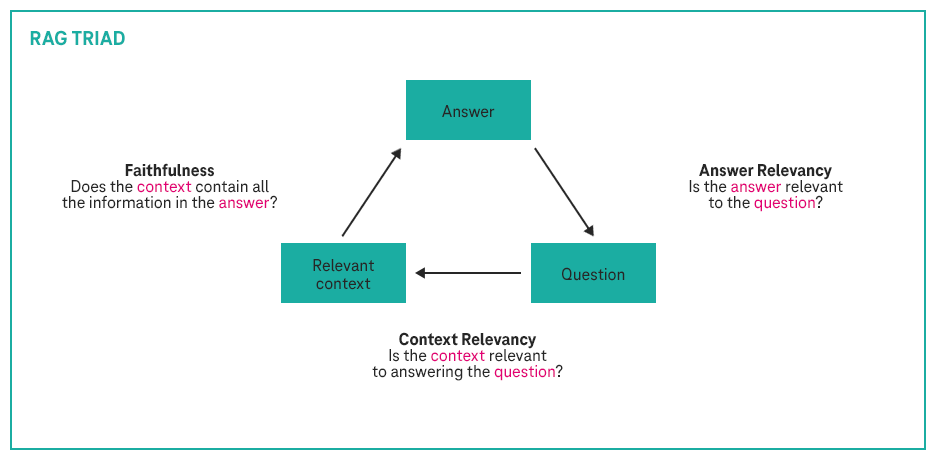

The aim of self-evaluation is for the AI itself to assess how reliable one of its statements is. In particular, “alternative facts” or “hallucinations” should be avoided. We use the following metrics at iits for this purpose:

Within the RAG system, we have three components: the question, the relevant context, and the answer. Through further queries to the LLM, we ask pair by pair whether these components match one another. The individual evaluations feed into an overall evaluation that tells us how trustworthy the actual answer to the original question is. In this way, we enable automated self-evaluation of the AI system and substantially reduce the risk of undetected hallucinations.
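The pairwise checks can be sketched as follows. This is an illustration under assumptions rather than the metrics iits actually uses: the function names, the YES/NO prompt wording, and the aggregation rule (share of passed checks) are hypothetical, and `ask_llm` is a placeholder for whatever LLM client is available. A stub judge is used here so the example runs without a model.

```python
from itertools import combinations
from typing import Callable


def pairwise_prompts(question: str, context: str, answer: str) -> dict[str, str]:
    """Build one yes/no check per pair of RAG components."""
    parts = {"question": question, "context": context, "answer": answer}
    prompts = {}
    for (name_a, text_a), (name_b, text_b) in combinations(parts.items(), 2):
        prompts[f"{name_a}-{name_b}"] = (
            f"Does the {name_b} match the {name_a}? Reply YES or NO.\n"
            f"{name_a}: {text_a}\n"
            f"{name_b}: {text_b}"
        )
    return prompts


def self_evaluate(
    question: str,
    context: str,
    answer: str,
    ask_llm: Callable[[str], str],
) -> dict:
    """Ask the judge about each pair and aggregate the verdicts."""
    verdicts = {
        pair: ask_llm(prompt).strip().upper().startswith("YES")
        for pair, prompt in pairwise_prompts(question, context, answer).items()
    }
    # Assumption: the overall score is the share of passed checks.
    score = sum(verdicts.values()) / len(verdicts)
    return {"verdicts": verdicts, "score": score}


def stub_judge(prompt: str) -> str:
    """Stand-in for a real LLM call; always approves (for demonstration only)."""
    return "YES"


result = self_evaluate(
    question="What is the date of the tender deadline?",
    context="[page 9] The tender deadline is 15.07.2022.",
    answer="15.07.2022",
    ask_llm=stub_judge,
)
print(result["verdicts"], result["score"])
```

An answer whose score falls below a chosen threshold could then be routed to an employee for manual review, while high-scoring answers are accepted with a lighter check.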

The question of how this solution can be implemented securely and in compliance with data protection regulations is of central importance. In a business environment, the focus is often on sensitive data, the protection of which is a top priority. In addition, European data protection regulations, in particular the GDPR, require strict measures to protect personal and company-relevant data. This is where the Open Telekom Cloud plays a crucial role.

A key selling point of the Open Telekom Cloud is that LLMs and AI-based solutions can be hosted in Germany by a German cloud provider.

This ensures that the data remains within Germany. Companies can therefore be confident that they meet the high data protection requirements and that their data is not processed in insecure or legally problematic regions.

In addition, the Open Telekom Cloud offers the scalability and flexibility that modern companies need for the use of AI. By using powerful cloud infrastructure, even large volumes of data and complex models can be processed efficiently without jeopardizing data security. Data processing is carried out in full compliance with the strict requirements of the GDPR.

The use of AI in the corporate context offers enormous advantages, especially when it comes to efficiently extracting specific information from large documents and turning it into usable answers. With the combination of RAG systems and a secure, GDPR-compliant infrastructure such as the Open Telekom Cloud, companies can exploit the full potential of AI without compromising on data security.

This integration ensures that sensitive company data remains protected, while at the same time AI-supported processes increase efficiency and save valuable time. The Open Telekom Cloud offers decisive added value here thanks to the ability to operate AI-based solutions securely and in compliance with data protection regulations in Germany.

Would you like to try out these new technologies for yourself? Then have a chat with Cloudia, the Open Telekom Cloud chatbot. Or create a trial account for IITS.AI, which offers state-of-the-art AI technology and runs in the Open Telekom Cloud.

Alternatively, if you are interested in programming your own AI applications with LLMs, you can use the T-Systems LLM Hub Playground to get a first impression of the potential of AI. You also have the option of requesting an API key.
