In this article you can read about

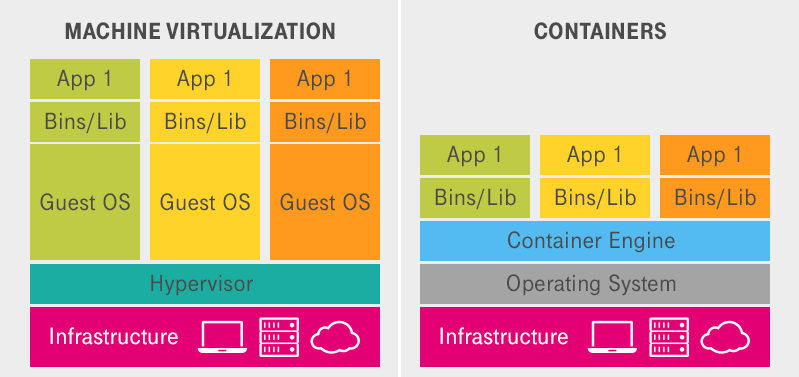

- the differences between VMs and containers,

- which of the two forms of virtualization you should use depending on the situation,

- and what services the Open Telekom Cloud offers for this.

Docker and other container virtualisation systems are revolutionising IT at an astounding speed. They are virtually everywhere in the corporate environment, and a DZone trend report from October 2023 underscores this: 88% of the company representatives surveyed state that they use containers and tools such as Docker and Kubernetes (K8s). 81% use Docker and 71% use Kubernetes to manage containers in development and production.