- the purposes for which high-performance computing (HPC) from the public cloud is suitable,

- the prerequisites that must be fulfilled, and

- what companies should pay attention to.

Whether it is load simulations in mechanical engineering, gene analyses in medicine, or machine learning in artificial intelligence, highly complex processes require enormous amounts of computing power. Until a few years ago, such calculations were possible only on expensive supercomputer hardware. Today, companies can book the corresponding capacity on demand from the public cloud, for example from the Open Telekom Cloud. This lets them use highly specialized, high-performance hardware exactly as needed, process huge amounts of data in a very short time, and solve complex problems much faster than before. They also do not need to set up and operate their own expensive hardware, which saves them high fixed costs.

But before companies use high-performance computing (HPC) from the public cloud, a number of important questions arise:

- How does HPC differ from traditional public cloud resources?

- For which scenarios is HPC useful, and for which is it not?

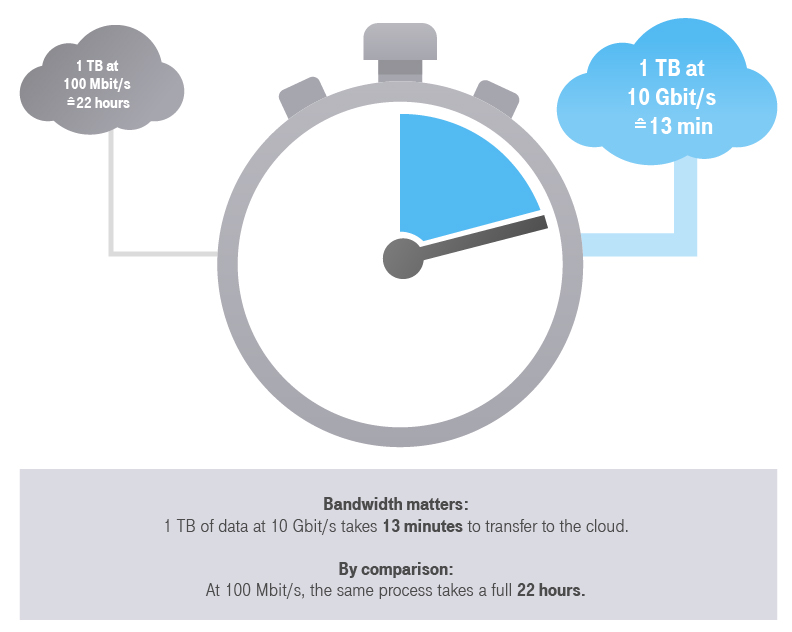

- How must the network connection be dimensioned?

- Which data should be in the cloud and which should not?

- Where is the best value for money?

- Which software and which certificates are indispensable?

- And what role do security and data protection play?